This document assumes you have already installed Python 3, and you have used both pip and venv. If not, refer to these instructions.

PythonForBeginners.com offers free content for those looking to learn the Python programming language. We offer the above Python Tutorial with over 4,000 words of content to help cover all the basics. We also offer an email newsletter that provides more tips and tricks to solve your programming objectives. However, if you want to learn Python or are new to the world of programming, it can be quite tough getting started. There are so many things to learn: coding, object-oriented programming, building desktop apps, creating web apps with Flask or Django, learning how to plot, and even how to use machine learning or artificial intelligence.

- 2021-05-26 An introduction to Web Scraping with Python and Azure Functions - PyLadies Amsterdam
- 2021-05-27 Conf42 Python 2021
- 2021-06-02 DjangoCon Europe 2021

- Python projects for beginners A collection of practical projects for you to learn Python. Python web development, game development, data science and more.

- By the end of this tutorial, you will have a grasp of the essentials for extracting data from most of the websites on the internet. This includes the usage of BeautifulSoup for getting elements through patterns, Browser DevTools for pattern investigation, and Requests for managing the interface with the servers. This course will be useful for anyone dealing with extracting web data from pages.

Sweigart briefly covers scraping in chapter 12 of Automate the Boring Stuff with Python (second edition).

This chapter here and the two following chapters provide additional context and examples for beginners.

BeautifulSoup documentation:

Setup for BeautifulSoup¶

BeautifulSoup is a scraping library for Python. We want to run all our scraping projects in a virtual environment, so we will set that up first. (Students have already installed Python 3.)

Create a directory and change into it¶

The first step is to create a new folder (directory) for all your scraping projects.

Do not use any spaces in your folder names. If you must use punctuation, do not use anything other than an underscore _. It’s best if you use only lowercase letters.

Change into that directory. For me, the command would be:

Create a new virtualenv in that directory and activate it¶

Create a new virtual environment there (this is done only once).

MacOS:

Windows:

Activate the virtual environment:

MacOS:

Windows:

Important: You should now see (env) at the far left side of your prompt. This indicates that the virtual environment is active. For example (MacOS):

When you are finished working in a virtual environment, you should deactivate it. The command is the same in MacOS or Windows (DO NOT DO THIS NOW):

You’ll know it worked because (env) will no longer be at the far left side of your prompt.

Install the BeautifulSoup library¶

In MacOS or Windows, at the command prompt, type:

This is how you install any Python library that exists in the Python Package Index. Pretty handy. pip is a tool for installing Python packages, which is what you just did.

Note

You have installed BeautifulSoup in the Python virtual environment that is currently active. When that virtual environment is not active, BeautifulSoup will not be available to you. This is ideal, because you will create different virtual environments for different Python projects, and you won’t need to worry about updated libraries in the future breaking your (past) code.

Test BeautifulSoup¶

Start Python. Because you are already in a Python 3 virtual environment, Mac users need only type python (NOT python3). Windows users also type python as usual.

You should now be at the >>> prompt — the Python interactive shell prompt.

In MacOS or Windows, type (or copy/paste) one line at a time:

1. You imported two names, the urlopen function and the BeautifulSoup class (the first two lines).

2. You used urlopen to copy the entire contents of the URL given into a new Python variable, page (line 3).

3. You used the BeautifulSoup function to process the value of that variable (the plain-text contents of the file at that URL) through a built-in HTML parser called html.parser.

4. The result: All the HTML from the file is now in a BeautifulSoup object with the new Python variable name soup. (It is just a variable name.)

5. Last line: Using the syntax of the BeautifulSoup library, you printed the first h1 element (including its tags) from that parsed value.

If it works, you’ll see:

Check out the page on the web to see what you scraped.
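The steps described above can also be tried without a network connection. The following is a minimal sketch, not the original five lines: it assumes a small made-up HTML page held in memory with `BytesIO`, which stands in for the object that `urlopen` returns. The page contents are invented for illustration.

```python
from io import BytesIO
from bs4 import BeautifulSoup

# Hypothetical stand-in for urlopen(...): a file-like object with made-up HTML
page = BytesIO(b"<html><body><h1>Sample heading</h1><p>Some text.</p></body></html>")

# Parse the contents with Python's built-in HTML parser
soup = BeautifulSoup(page, "html.parser")

# Print the first h1 element, including its tags
print(soup.h1)
```

With this made-up page, the last line prints `<h1>Sample heading</h1>`.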

Attention

If you got an error about SSL, quit Python (quit() or Control-D) and COPY/PASTE this at the command prompt (MacOS only):

Then return to the Python prompt and retry the five lines above.

The command soup.h1 would work the same way for any HTML tag (if it exists in the file). Instead of printing it, you might stash it in a variable:

Then, to see the text in the element without the tags:
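As a small sketch of both steps (stashing the element, then reading its text), assuming a made-up one-line HTML string rather than the example page:

```python
from bs4 import BeautifulSoup

# Made-up HTML for illustration only
soup = BeautifulSoup("<h1>Sample <em>heading</em></h1>", "html.parser")

heading = soup.h1       # stash the Tag object in a variable
print(heading)          # the element, tags included
print(heading.text)     # only the text inside the element
```

The variable name `heading` is arbitrary. Note that `.text` also drops the nested `<em>` tags and keeps only their text.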

Understanding BeautifulSoup¶

BeautifulSoup is a Python library that enables us to extract information from web pages and even entire websites.

We use BeautifulSoup commands to create a well-structured data object (more about objects below) from which we can extract, for example, everything with an <li> tag, or everything with class='book-title'.

After extracting the desired information, we can use other Python commands (and libraries) to write the data into a database, CSV file, or other usable format — and then we can search it, sort it, etc.

What is the BeautifulSoup object?¶

It’s important to understand that many of the BeautifulSoup commands work on an object, which is not the same as a simple string.

Many programming languages include objects as a data type. Python does, JavaScript does, etc. An object is an even more powerful and complex data type than an array (JavaScript) or a list (Python) and can contain many other data types in a structured format.

When you extract information from an object with a BeautifulSoup command, sometimes you get a single Tag object, and sometimes you get a Python list (similar to an array in JavaScript) of Tag objects. The way you treat that extracted information will be different depending on whether it is one item or a list (usually, but not always, containing more than one item).

That last paragraph is REALLY IMPORTANT, so read it again. For example, you cannot call .text on a list. You’ll see an error if you try it.
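A short sketch may make the difference concrete. It assumes a made-up HTML list; `find()` and `find_all()` are the BeautifulSoup methods discussed later in this chapter.

```python
from bs4 import BeautifulSoup

html = "<ul><li>Apples</li><li>Pears</li></ul>"  # made-up HTML
soup = BeautifulSoup(html, "html.parser")

one_item = soup.find("li")        # a single Tag object
all_items = soup.find_all("li")   # a list-like collection of Tag objects

print(one_item.text)              # works: one Tag

try:
    all_items.text                # fails: the collection has no .text
except AttributeError as error:
    print("Error:", error)

# Loop over the collection and treat each Tag individually
item_texts = [item.text for item in all_items]
print(item_texts)
```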

How BeautifulSoup handles the object¶

In the previous code, when this line ran:

… you copied the entire contents of a file into a new Python variable named page. The contents were stored as an HTTPResponse object. We can read the contents of that object like this:

… but that’s not going to be very usable, or useful — especially for a file with a lot more content in it.

When you transform that HTTPResponse object into a BeautifulSoup object — with the following line — you create a well-structured object from which you can extract any HTML element and the text and/or attributes within any HTML element.
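To illustrate the contrast between raw contents and a parsed object, here is a hedged sketch. It assumes made-up HTML bytes and uses `BytesIO` as a stand-in for the HTTPResponse object, since both offer a `.read()` method.

```python
from io import BytesIO
from bs4 import BeautifulSoup

raw_html = b"<html><body><h1>Sample heading</h1><p class='note'>Hello</p></body></html>"

# Reading a response-like object gives you one long run of bytes
page = BytesIO(raw_html)      # hypothetical stand-in for the HTTPResponse
contents = page.read()
print(contents)

# Parsing the same bytes gives you a structured object you can query
soup = BeautifulSoup(contents, "html.parser")
print(soup.p.text)        # text inside the first <p>
print(soup.p["class"])    # value of the class attribute on the first <p>
```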

Some basic BeautifulSoup commands¶

Let’s look at a few examples of what BeautifulSoup can do.

Finding elements that have a particular class¶

Deciding the best way to extract what you want from a large HTML file requires you to dig around in the source, using Developer Tools, before you write the Python/BeautifulSoup commands. In many cases, you’ll see that everything you want has the same CSS class on it. After creating a BeautifulSoup object (here, as before, it is soup), this line will create a Python list containing all the <td> elements that have the class city.

Attention

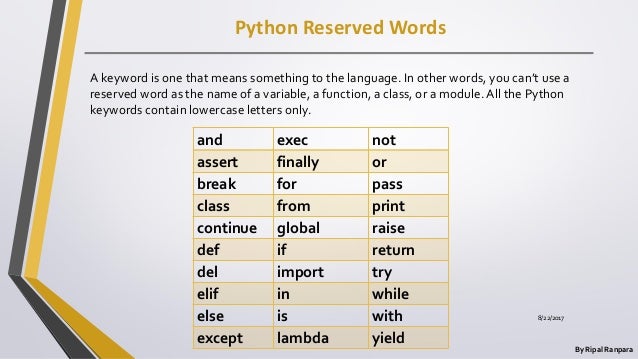

The word class is a reserved word in Python. Using class (alone) in the code above would give you a syntax error. So when we search by CSS class with BeautifulSoup, we use the keyword argument class_ — note the added underscore. Other HTML attributes DO NOT need the underscore.

Maybe there were 10 cities in <td> tags in that HTML file. Maybe there were 10,000. No matter how many, they are now in a list (assigned to the variable city_list), and you can search them, print them, write them out to a database or a JSON file — whatever you like. Often you will want to perform the same actions on each item in the list, so you will use a normal Python for-loop:

.get_text() is a handy BeautifulSoup method that will extract the text — and only the text — from the Tag object. If instead you wrote just print(city), you’d get the complete <td> — and any other tags inside that as well.
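Put together, the search by class and the loop might look like the sketch below. The table is made up for illustration; only the `td` tag, the class `city`, and the variable name `city_list` come from the text above.

```python
from bs4 import BeautifulSoup

# Made-up table for illustration; a real page will differ
html = """
<table>
  <tr><td class="city">Lima</td><td class="country">Peru</td></tr>
  <tr><td class="city">Oslo</td><td class="country">Norway</td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

# Note class_ with an underscore, because class is reserved in Python
city_list = soup.find_all("td", class_="city")

for city in city_list:
    print(city.get_text())
```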

Note

The BeautifulSoup methods .get_text() and .getText() are the same. The BeautifulSoup property .text is a shortcut to .get_text() and is acceptable unless you need to pass arguments to .get_text().

Finding all vs. finding one¶

The BeautifulSoup find_all() method you just saw always produces a list. (Note: findAll() will also work.) If you know there will be only one item of the kind you want in a file, you should use the find() method instead.

For example, maybe you are scraping the address and phone number from every page in a large website. In this case, there is only one phone number on the page, and it is enclosed in a pair of tags with the attribute id='call'. One line of your code gets the phone number from the current page:

You don’t need to loop through that result — the variable phone_number will contain only one Tag object, for whichever HTML tag had that ID. To test what the text alone will look like, just print it using get_text() to strip out the tags.
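A minimal sketch of that lookup, assuming a made-up page fragment (the id value `call` and the variable name `phone_number` are taken from the text above):

```python
from bs4 import BeautifulSoup

# Made-up page fragment for illustration
html = '<div><p id="call">Call us: <b>555-0100</b></p><p>Other text</p></div>'
soup = BeautifulSoup(html, "html.parser")

phone_number = soup.find(id="call")   # one Tag object, not a list
print(phone_number.get_text())        # text only, tags stripped
```

If nothing on the page matches, `find()` returns `None`, so in a real scraper it is worth checking the result before calling `get_text()` on it.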

Notice that you’re often using soup. Review above if you’ve forgotten where that came from. (You may use another variable name instead, but soup is the usual choice.)

Finding the contents of a particular attribute¶

One last example from the example page we have been using.

Say you’ve made a BeautifulSoup object from a page that has dozens of images on it. You want to capture the path to each image file on that page (perhaps so that you can download all the images). I would do this in two steps:

1. First, you make a Python list containing all the img elements that exist in the soup object.

2. Second, you loop through that list and print the contents of the src attribute from each img tag in the list.
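The two steps can be sketched as follows. The HTML and the variable name `image_list` are assumptions made for illustration.

```python
from bs4 import BeautifulSoup

# Made-up HTML with two images
html = """
<div>
  <img src="images/cat.jpg" alt="A cat">
  <img src="images/dog.png" alt="A dog">
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# Step 1: a list of all img elements
image_list = soup.find_all("img")

# Step 2: print the src attribute of each one
for image in image_list:
    print(image.attrs["src"])
```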

It is possible to condense that code and do the task in two lines, or even one line, but for beginners it is clearer to get the list of elements and name it, then use the named list and get what is wanted from it.

Important

We do not need get_text() in this case, because the contents of the src attribute (or any HTML attribute) are nothing but text. There are never tags inside the src attribute. So think about exactly what you’re trying to get, and what it is like inside the HTML of the page.

You can see the code from above all in one file.

There’s a lot more to learn about BeautifulSoup, and we’ll be working with various examples. You can always read the docs. Most of what we do with BeautifulSoup, though, involves these tasks:

- Find everything with a particular class

- Find everything with a particular attribute

- Find everything with a particular HTML tag

- Find one thing on a page, often using its id attribute

- Find one thing that’s inside another thing
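As a rough sketch, each of the tasks listed above maps to one line of BeautifulSoup code. The HTML, the class and id values, and the variable names here are all made up for illustration.

```python
from bs4 import BeautifulSoup

html = """
<div id="main">
  <h2 class="book-title">First Book</h2>
  <h2 class="book-title">Second Book</h2>
  <a href="/about">About</a>
  <span>No link here</span>
  <ul id="menu"><li>Home</li><li>Contact</li></ul>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

by_class = soup.find_all(class_="book-title")   # everything with a class
by_attribute = soup.find_all(href=True)         # everything with an href attribute
by_tag = soup.find_all("li")                    # everything with a given tag
one_by_id = soup.find(id="menu")                # one thing, by its id
nested = one_by_id.find("li")                   # one thing inside another thing

print(len(by_class), len(by_attribute), len(by_tag))
print(nested.text)
```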

A BeautifulSoup scraping example¶

To demonstrate the process of thinking through a small scraping project, I made a Jupyter Notebook that shows how I broke down the problem step by step, and tested one thing at a time, to reach the solution I wanted. Open the notebook here on GitHub to follow along and see all the steps. (If that link doesn’t work, try this instead.)

The code in the final cell of the notebook produces this 51-line CSV file by scraping 10 separate web pages.

To run the notebook, you will need to have installed the Requests module and also Jupyter Notebook.

See these instructions for information about how to run Jupyter Notebooks.

Attention

After this introduction, you should NOT use from urllib.request import urlopen or the urlopen() function. Instead, you will use requests as demonstrated in the notebook linked above.

Next steps¶

In the next chapter, we’ll look at how to handle common web scraping projects with BeautifulSoup and Requests.


A growing share of business activities and of our lives is spent online, which has led to an increase in the amount of publicly available data. Web scraping allows you to tap into this public information with the help of web scrapers.

In the first part of this guide to the basics of web scraping, you will learn:

- What is web scraping?

- Web scraping use cases

- Types of web scrapers

- How does a web scraper work?

- Difference between a web scraper and web crawler

- Is web scraping legal?

What is web scraping?

Web scraping automates the process of extracting data from a website or multiple websites. Web scraping or data extraction helps convert unstructured data from the internet into a structured format allowing companies to gain valuable insights. This scraped data can be downloaded as a CSV, JSON, or XML file.

Web scraping (also called data scraping, data extraction, or web data extraction) helps transform content on the Internet into structured data that can be consumed by other computers and applications. The scraped data can help users or businesses gather insights that would otherwise be expensive and time-consuming to obtain.

Since the basic idea of web scraping is automating a task, it can be used to create web scraping APIs and Robotic Process Automation (RPA) solutions. Web scraping APIs allow you to stream scraped website data easily into your applications. This is especially useful in cases where a website does not have an API or has a rate/volume-limited API.

Uses of Web Scraping

People use web scrapers to automate all sorts of scenarios. Web scrapers have a variety of uses in the enterprise. We have listed a few below:

- Price Monitoring – Product data affects eCommerce monitoring, product development, and investing. Extracting product data such as pricing, inventory levels, reviews, and more from eCommerce websites can help you create a better product strategy.

- Marketing and Lead Generation – As a business, you need qualified leads to reach customers and generate sales. That means gathering details of companies, addresses, contacts, and other necessary information. Publicly available information like this is valuable. Web scraping can enhance the productivity of your research methods and save you time.

- Location Intelligence – The transformation of geospatial data into strategic insights can solve a variety of business challenges. By interpreting rich data sets visually you can conceptualize the factors that affect businesses in various locations and optimize your business process, promotion, and valuation of assets.

- News and Social Media – Social media and news show you how your viewers engage with, share, and perceive your content. When you collect this information through web scraping, you can optimize your social content, update your SEO, monitor competitor brands, and identify influential customers.

- Real Estate – The real estate industry has myriad opportunities. Including web-scraped data in your business can help you identify real estate opportunities, find emerging markets, and analyze your assets.


How to get started with web scraping

There are many ways to get started with web scraping. Writing code from scratch is fine for smaller data scraping needs. Beyond that, if you need to scrape a few different types of web pages and thousands of data fields, you will likely need a web scraping service that is able to scrape multiple websites easily on a large scale.

Custom Web Scraping Services

Many companies build their own web scraping departments, but other companies use web scraping services. While it may make sense to start an in-house web scraping solution, the time and cost involved can outweigh the benefits. Hiring a custom web scraping service lets you concentrate on your projects.

Web scraping companies such as ScrapeHero have the technology and scalability to handle web scraping tasks that are complex and massive in scale (think millions of pages). You need not worry about setting up and running scrapers, handling CAPTCHAs, rotating proxies, or working around other measures websites use to block web scraping.

Web Scraping Tools and Software

Point and click web scraping tools have a visual interface, where you can annotate the data you need, and it automatically builds a web scraper with those instructions. Web Scraping tools (free or paid) and self-service applications can be a good choice if the data requirement is small, and the source websites aren’t complicated.

ScrapeHero Cloud has pre-built scrapers that, in addition to scraping search engine data, can scrape job data, real estate data, social media, and more. These scrapers are easy to use and cloud-based, so you need not worry about selecting the fields to be scraped or downloading any software. The scraper and the data can be accessed from any browser at any time, and the data can be delivered directly to Dropbox.


Scraping Data Yourself

You can build web scrapers in almost any programming language. It is easier with scripting languages such as JavaScript (Node.js), PHP, Perl, Ruby, or Python. If you are a developer, open-source web scraping tools can also help you with your projects. If you are new to web scraping, these tutorials and guides can help you get started.

If you don't like or want to code, ScrapeHero Cloud is just right for you!

Skip the hassle of installing software, programming and maintaining the code. Download this data using ScrapeHero cloud within seconds.

How does a web scraper work

A web scraper is a software program or script that is used to download the contents (usually text-based and formatted as HTML) of multiple web pages and then extract data from them.

Web scrapers are more complicated than this simplistic representation. They have multiple modules that perform different functions.

What are the components of a web scraper

Web scraping is like any other Extract-Transform-Load (ETL) process. Web scrapers crawl websites, extract data from them, transform it into a usable structured format, and load it into a file or database for subsequent use.

A typical web scraper has the following components:

1. Crawl

First, we start at the data source and decide which data fields we need to extract. For that, we have web crawlers that crawl the website and visit the links that we want to extract data from (e.g., the crawler will start at https://scrapehero.com and crawl the site by following links on the home page).

The goal of a web crawler is to learn what is on the web page, so that the information can be retrieved when it is needed. Web crawling can be guided by what the crawler finds, or it can search the whole web (just like the Google search engine does).

2. Parse and Extract

Extracting data is the process of taking the raw scraped data that is in HTML format and extracting and parsing the meaningful data elements. In some cases extracting data may be simple such as getting the product details from a web page or it can get more difficult such as retrieving the right information from complex documents.

You can use data extractors and parsers to extract the information you need. There are different kinds of parsing techniques: Regular Expression, HTML Parsing, DOM Parsing (using a headless browser), or Automatic Extraction using AI.

3. Format

Next, the extracted data needs to be formatted into a human-readable form. This can be a simple data format such as CSV, JSON, or XML. You can store the data according to the specification of your data project.

The data extracted using a parser won’t always be in the format that is suitable for immediate use. Most of the extracted datasets need some form of “cleaning” or “transformation.” Regular expressions, string manipulation, and search methods are used to perform this cleaning and transformation.
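As an illustration of this kind of cleaning, here is a minimal sketch using only Python's standard library. The raw values, field names, and helper functions are hypothetical; real data will need its own rules.

```python
import re

# Hypothetical raw values as they might come out of a parser
raw_rows = [
    {"name": "  Blue   Widget\n", "price": "$1,299.00 "},
    {"name": "Red Widget ", "price": " $45.50"},
]

def clean_name(text):
    # Collapse runs of whitespace into single spaces and trim the ends
    return re.sub(r"\s+", " ", text).strip()

def clean_price(text):
    # Drop everything except digits and the decimal point, then convert
    return float(re.sub(r"[^\d.]", "", text))

cleaned_rows = [
    {"name": clean_name(row["name"]), "price": clean_price(row["price"])}
    for row in raw_rows
]
print(cleaned_rows)
```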

4. Store and Serialize Data

After the data has been scraped, extracted, and formatted you can finally store and export the data. Once you get the cleaned data, it needs to be serialized according to the data models that you require. Choosing an export method largely depends on how large your data files are and what data exports are preferred within your company.

This is the final module that will output data in a standard format that can be stored in Databases using ETL tools (Check out our guide on ETL Tools), JSON/CSV files, or data delivery methods such as Amazon S3, Azure Storage, and Dropbox.
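The four components above can be sketched end to end in a few lines of Python. This is an illustration under stated assumptions, not a production design: the `SITE` dictionary is a hypothetical in-memory "website" so the sketch runs without a network, and the function names and fields are made up.

```python
import csv
import io
import json
from bs4 import BeautifulSoup

# Hypothetical in-memory "website" so the sketch runs without a network
SITE = {
    "/": '<a href="/p1">One</a> <a href="/p2">Two</a>',
    "/p1": '<h1>Blue Widget</h1><span class="price"> $10.00 </span>',
    "/p2": '<h1>Red Widget</h1><span class="price"> $12.50 </span>',
}

def crawl(start):
    # 1. Crawl: follow the links found on the start page
    soup = BeautifulSoup(SITE[start], "html.parser")
    return [a["href"] for a in soup.find_all("a")]

def parse(path):
    # 2. Parse and extract: pull the fields we care about
    soup = BeautifulSoup(SITE[path], "html.parser")
    return {"name": soup.h1.text, "price": soup.find(class_="price").text}

def format_row(row):
    # 3. Format: clean the raw strings into usable values
    return {"name": row["name"].strip(),
            "price": float(row["price"].strip().lstrip("$"))}

def store(rows):
    # 4. Store and serialize: build CSV text (a file or database in practice)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

rows = [format_row(parse(path)) for path in crawl("/")]
print(store(rows))
print(json.dumps(rows))
```

In a real scraper, `crawl` and `parse` would download pages over HTTP, and `store` would write to a file, a database, or a storage service.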

Web Crawling vs. Web Scraping

People often use Web Scraping and Web Crawling interchangeably. Although the underlying concept is to extract data from the web, they are different.

Web crawling mostly refers to downloading and storing the contents of a large number of websites by following links in web pages. A web crawler is a standalone bot that scans the internet, searching for and indexing content. In general, a ‘crawler’ is able to navigate pages on its own. Crawlers are the backbones of search engines like Google, Bing, Yahoo, etc.

A Web scraper is built specifically to handle the structure of a particular website. The scraper then uses this site-specific structure to extract individual data elements from the website. Unlike a web crawler, a web scraper extracts specific information such as pricing data, stock market data, business leads, etc.

Is web scraping legal?

Although web scraping is a powerful technique in collecting large data sets, it is controversial and may raise legal questions related to copyright and terms of service. Most times a web scraper is free to copy a piece of data from a web page without any copyright infringement. This is because it is difficult to prove copyright over such data since only a specific arrangement or a particular selection of the data is legally protected.

Legality depends on the legal jurisdiction (i.e., laws are country and locality specific). Gathering or scraping publicly available information is generally not illegal; if it were, Google could not exist as a company, because it scrapes data from websites all over the world.

Terms of Service

Although most web applications and companies include some form of TOS agreement, it lies within a gray area. For instance, the owner of a web scraper that violates the TOS may argue that he or she never saw or officially agreed to the TOS.

Some forms of web scraping can be illegal, such as scraping non-public data or disclosed data. Non-public data is data that isn’t reachable or open to the public. An example of this would be the theft of intellectual property.

Ethical Web Scraping

If a web scraper sends data-acquiring requests too frequently, the website may block it. The scraper may be refused entry, and its owner may be liable for damages, because the owner of the web application has a property interest. An ethical scraping tool or professional web scraping service will avoid this issue by maintaining a reasonable request frequency. We talk in other guides about how you can make your scraper more “polite” so that it doesn’t get you into trouble.
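One way to keep a scraper polite is to honor the site's robots.txt rules and pause between requests. The sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content, the user agent name, the URLs, and the `polite_fetch` helper are all hypothetical, and the `fetch` argument stands for whatever download function your scraper uses.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would read the site's own file
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 2",
]
robots = RobotFileParser()
robots.parse(robots_lines)

USER_AGENT = "example-scraper"  # made-up name for illustration

def polite_fetch(urls, fetch, delay_seconds):
    # Fetch only allowed URLs, pausing between requests
    results = {}
    for url in urls:
        if not robots.can_fetch(USER_AGENT, url):
            continue  # skip pages the site asks scrapers to avoid
        results[url] = fetch(url)
        time.sleep(delay_seconds)
    return results

# Use the site's requested delay if it states one, else fall back to 1 second
delay = robots.crawl_delay(USER_AGENT) or 1
```

A call such as `polite_fetch(url_list, my_download_function, delay)` would then skip disallowed pages and wait between the others.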

What’s next?

Let’s do something hands-on before we get into web page structures and XPaths. We will make a very simple scraper to scrape Reddit’s top pages and extract the title and URLs of the links shared.

Check out part 2 and 3 of this post in the link here – A beginners guide to Web Scraping: Part 2 – Build a web scraper for Reddit using Python and BeautifulSoup

Web Scraping Tutorial for Beginners – Part 3 – Navigating and Extracting Data – Navigating and Scraping Data from Reddit
